Mastodon: A Social Media Platform Dominated By Pedophiles & Child Porn

Following a Secjuice investigation, it has become clear that Mastodon is a social media platform dominated by pedophiles and most of its content is child porn.

Believe me when I tell you that I did not want to write another article about pedophiles after the last one, but here we are. It is a vile subject to read and write about, the rabbit hole goes deeper than any of us ever want to think about, and when I say us I really mean me. This will be the last article I will ever write on the subject of pedophiles and child porn, and I feel tainted for having touched it.

mastodon is probably mostly pedophiles

— ben (@benjimadness) April 26, 2022

Introduction

Shortly after Elon Musk took over Twitter some of the most influential people in infosec began tweeting that they were leaving Twitter. I don't think the reasons why they are pretending to leave are important, but I do think it is important we take a closer look at the social media platform they are desperately encouraging their followers to sign up on so they can have an audience.

In their haste to flee Twitter these influencers set up accounts on a social media platform called Mastodon without knowing much about the place they were migrating to, or anything about the kinds of communities that call Mastodon their home. To be fair, Mastodon makes it almost impossible to search for communities and content across the fediverse by design, so many of the newcomers have absolutely no idea who lives on Mastodon because they cannot see them.

Consider this article the first in a series of Secjuice articles focused on the Mastodon platform and the communities which call Mastodon their home. As our OSINT efforts against Mastodon ramp up we will publish more OSINT-focused articles on the subject. The technical folks are busy getting stuck in too, and I am certain they have some great articles headed your way. If you are an OSINT researcher interested in Mastodon, check out this interactive map of the Fediverse, this diagram of the Mastodon attack surface created by Sinwindie, and this tool for gathering intel on Mastodon users built by OSINT Tactical.

A Short History Of The Fediverse

The founder of Mastodon, Eugen Rochko, didn't invent the concept of a decentralized social network; he just built his own platform implementation of OStatus, an open standard for federated microblogging. OStatus was built to provide an alternative to Twitter, but instead of being controlled by a single commercial entity like Twitter is, it was controlled through a federation of 'independent instances'. If this sounds familiar it is because, quite often, new technological ideas are just old ideas wearing a new pair of shoes.

#Mastodon has a deeply troubling history of being a safe haven for child sexual abuse material (CSAM). The communities focused on CSAM content were a significant driver in the platforms early growth and one of those child porn groups became the largest instance in the fediverse.

— Secjuice (@Secjuice) November 9, 2022

The fediverse that Mastodon users live in was (arguably) created by an American called Evan Prodromou, who launched the first distributed social platform (Identi.ca). The OStatus federation protocol grew out of that work, followed by Pump.io, and over various iterations these evolved into ActivityPub, a social networking protocol standardized by the W3C.

Mastodon is the best-known platform implementation of OStatus/ActivityPub, but before Mastodon everyone used GNU Social. Older Twitter users will remember GNU Social as the place the very first wave of Twitter exiles migrated to during the first great exodus of users with a strong political persuasion who no longer felt welcome on Twitter. People were leaving Twitter to build their own federated OStatus networks using GNU Social instances, but then in 2016 Eugen launched an OStatus platform called Mastodon, which quickly gained traction because he skinned it to look like a version of Twitter.

Users of the OStatus fediverse could now migrate from clunky old GNU Social to a Mastodon that looked just like Twitter. Eugen didn't build the fediverse, and he wasn't the first to build an OStatus platform, but he did make the fediverse more accessible and user-friendly to newcomers, to the point where by late 2017 newcomers to the fediverse had started calling the network and the protocol Mastodon, which is a bit like calling Facebook the internet.

Mastodon is the most popular platform on ActivityPub, a network protocol for federated microblogging that underpins the foundation of the fediverse, making Mastodon a social media platform on the network rather than a protocol.

The Great Pedophile Invasion Of 2017

You may think a term like "the great paedophile invasion" sounds ridiculously hyperbolic, but an invasion is exactly how the Mastodon community described it at the time. On April 14th 2017, Mastodon users across the fediverse started to see what users described as a "flood of child porn" federating across their instances, generated by what users described as "an organized invasion of paedophiles".

It must have really felt like an organized invasion to the one-year-old Mastodon community at the time (approx. 160k users), because the number of people on Mastodon suddenly grew by approximately 140k users. They were an inch away from doubling the Mastodon userbase over the space of one weekend.

Even worse, on April 17th the two largest instances these invaders lived on became the first and second largest instances in the fediverse, overtaking the mainstream mastodon.cloud and mastodon.social instances in the process, and making up roughly 40% of the total fediverse population, or 50% if you included their rapidly sprawling network of federated satellite instances. In orbit around the two big child porn Mastodon instances sprang up hundreds of smaller instances containing an additional 40k users distributing the same sort of content.

This caused what was described as an "unhinged hysteria" to develop among Mastodon users because the new users seemed determined to flood the fediverse with child sexual abuse material (CSAM). Less than a week later on April 24 the total number of posts on the largest of the child porn 'invader' communities overtook the total number of posts on mastodon.social in its entire existence. Not only were the invaders hell-bent on spreading child porn around the fediverse, to everyone's horror they seemed to be generating insane amounts of it.

Child Porn Communities Continue To Dominate Mastodon

Flash forward to 2022 and those two giant Mastodon child porn communities currently rank second and third on the fediverse leaderboard in terms of user numbers, making up the two largest instances on Mastodon after mastodon.social which holds first place with more users. But they are the two most active communities by a large margin in terms of total postings, ranking first and second with 60 million posts each against mastodon.social's 40 million posts.

like i don't know how to effectively describe how filled to the brim mastodon is of actual, literal pedophiles, racists and just all-out psychopaths where the content is not moderated, actively promoted and incredibly easy to come across unwillingly

— Lean Scotch Kenny G (@teyrns) November 6, 2022

These large communities are the visible tip of the Mastodon child porn iceberg. Orbiting in a federation around these large instances are thousands of smaller groups federated into their ecosystem, and these are the more extreme child porn communities. None of us really wants to explore that part of the fediverse and it makes us all sick to our stomachs knowing that there are so many of those communities on Mastodon. Not only do they make up more than half of the Mastodon userbase, but they are also the busiest communities in the fediverse.

I don’t really want to move to mastodon

— Ryn (@rynsrevels) November 5, 2022

I’ve kicked several pedophiles off the discord server I mod and guess where ALL of them went

Here is a glimpse into the world of pedophilia communities on Mastodon.

Why Can’t You See The Pedophiles On Mastodon?

Because the search function on Mastodon is broken by design, it is set up so that new users cannot see all of the existing users, their content and their communities in the fediverse by default. You have to federate with your fediverse neighbours in order to see their content, and to federate with them you have to know exactly where they are or you will never know you share a platform with them.

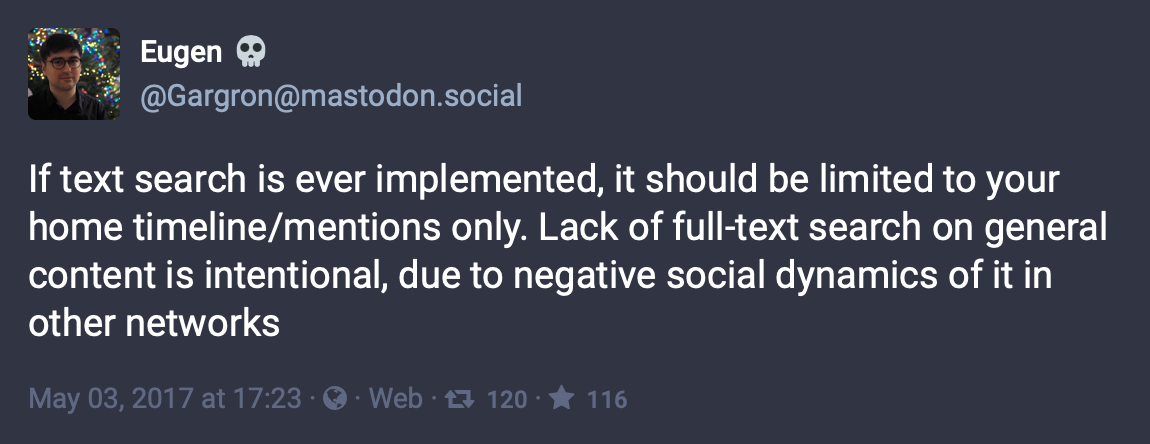

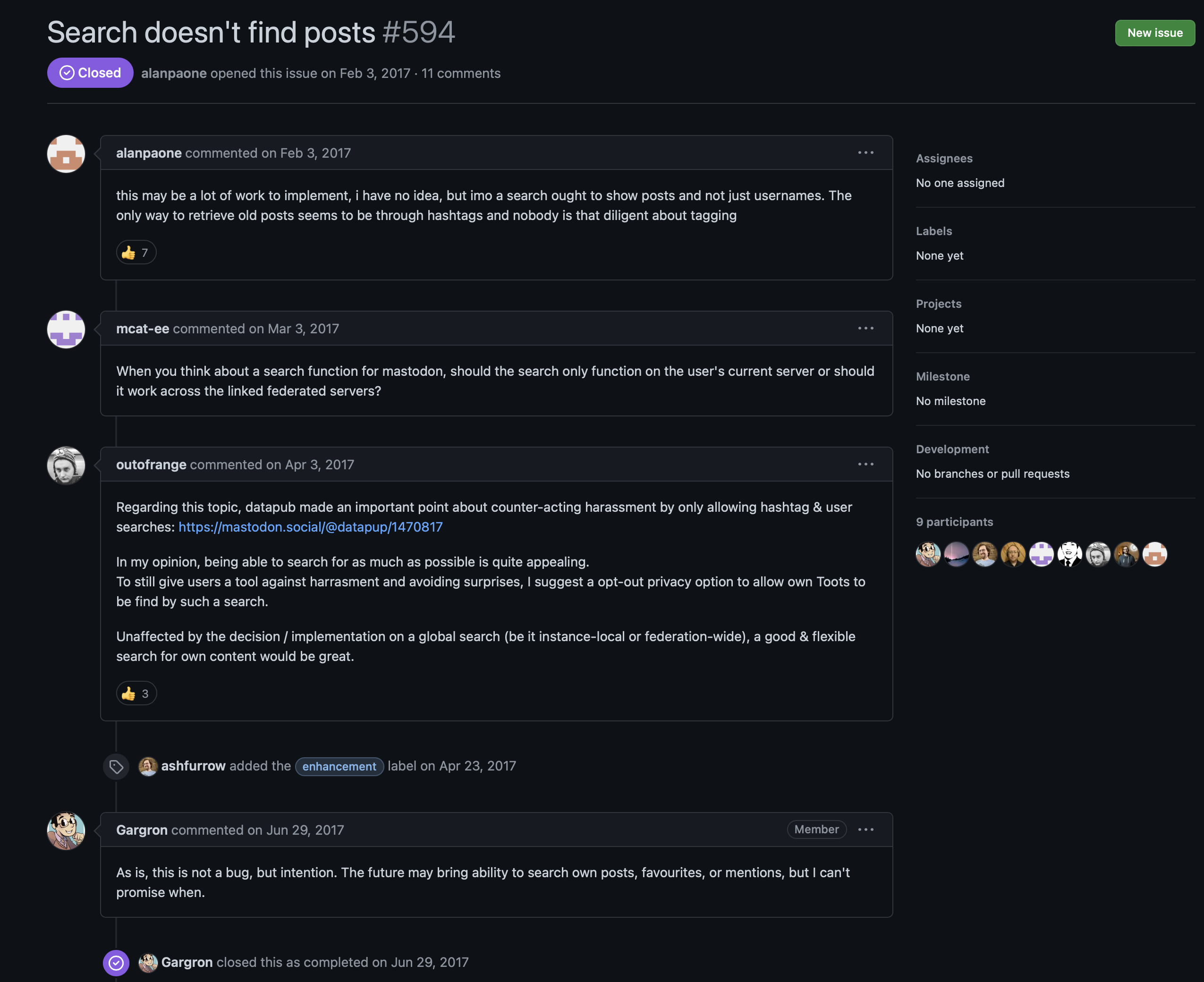

The founder of Mastodon clearly doesn't want search to work, and he has made it clear that the lack of search is by design and not a bug. Here he is telling others that the lack of search functionality is intentional "due to negative social dynamics".

He means the negative reaction of Mastodon users when they see all the CSAM.

A while back someone built a search tool that allowed Mastodon users to ‘globally’ search the Fediverse in a comprehensive way, but the Mastodon community totally freaked out about it and the tool was retired. Because Mastodon is home to the vilest, most abusive, extreme, and illegal communities on the internet the developers know that new users cannot be allowed to see who they are sharing the fediverse with, because if they knew it would sicken them to their stomachs.

This is how you accidentally moved to a pedo platform without realizing it.

If you do not believe me and want to learn how to find them, read this article.

The Crazy True Story Behind The Invasion

But who are all of these pedophiles, where did they come from, and why did they suddenly decide to invade Mastodon and build communities there? I have spoken to quite a few people about this, and the general consensus is that a synchronicity of circumstance is what drove hundreds of thousands of child porn fans into an 'invasion' of Mastodon and compelled them to build a home there.

The above map is of known Mastodon instances back in August 2017. Take one glance at the map and you will notice that most of the Mastodon userbase lived on servers in Japan. In August 2017, three of the top five instances were hosted in Japan and they collectively represented 60% of Mastodon's user population.

Twitter has always been the biggest social platform in Japan. More Japanese people use Twitter than Facebook, and Japan is the only country in the world where this is the case; in Japan Twitter reigns supreme. Similarly, Mastodon is really big in Japan (but for really uncomfortable reasons), and that is why the Japanese dominate its user base. Most of the Mastodon pedophiles who participated in the great paedophile invasion of 2017 were Japanese, but why on earth did the Japanese decide to invade Mastodon and flood it with child porn?

The Japanese Are *Really* Into Child Porn

In Japan they do not feel as strongly as we do about pedophiles, and they only made possession of child porn illegal in 2014. Before then simple possession was legal (production and distribution had been banned in 1999), and generations of Japanese grew up thinking child porn was nerdy rather than immoral or illegal. Just eight years ago you could be caught by the police watching hardcore child porn and you wouldn't get into any legal trouble for it.

Even though the law had been passed, in 2016 a human rights organization published a report which found that "the laws have not been sufficiently implemented due to lack of enforcement" and that "child pornography materials are available at stores in the Tokyo area as well as on the (.jp) internet". The UN and human rights activists were calling on Japan to crack down.

Although the law was passed in 2014, it wasn't until mid-2016 that the authorities finally began to clamp down hard on the illegal kinds of child porn, which is what drove so many Japanese paedophiles to migrate onto Mastodon in early 2017: they knew that the authorities were finally beginning to take the issue seriously. They had to, because things were really bad and Japan was a global leader in child porn.

This report on the scale, scope and context of the sexual exploitation of children in Japan by ECPAT, an NGO focused on ending the sexual exploitation of minors, gives you a sense of what Japan was like back then.

A recent deterioration in the gap between the rich and poor has resulted in increased child poverty and in children falling victims to sexual exploitation, notably through prostitution. Japan is also notorious for producing child sexual abuse material (CSAM), moreover, Japan is considered a destination, source, and transit country for sale and trafficking of children for sexual purposes. Many trafficked children are forced into prostitution and production of CSAM.

Japan didn't even make all child porn illegal either, they only made photos and videos with real children in them illegal, computer-generated imagery of children, no matter how sexual, is not illegal in the eyes of the law. Neither are animated cartoons, drawings, or physical sex dolls that resemble children.

Here is a Vice documentary that takes a closer look at this industry in Japan.

In the West the possession of any kind of sexual imagery involving children is not just a strong cultural taboo, it is also illegal. Those who trade in and create sexualized imagery of children are very obviously pedophiles, and it doesn't matter if that imagery is hand drawn, computer generated or a photograph, we class it all as child pornography, or what we now call child sexual abuse material (CSAM).

Japan's foot-dragging and legal loopholes drew criticism from overseas, with prominent NGOs and human rights groups describing Japan as “an international hub for the production and trafficking of child pornography” despite the new laws. But in 2016, when Japan suddenly cracked down hard and arrested a record number of pedophiles, panic spread through the pedophile community. When a hugely popular lolicon image hosting site called Pixiv joined Mastodon in April 2017, it acted as the catalyst for the community to invade en masse.

Because Mastodon was skinned to look like Twitter, and because the Japanese love Twitter, it seemed perfectly natural for them to flee the law and move to Mastodon where they could post, create and share child sexual abuse material without any administrators banning them or reporting them to the police.

The pedophile groups needed a safe harbor from the child porn possession laws, a safe social space to share and enjoy their content, and they found a home in Mastodon where these groups have been flourishing ever since.

The Developers Had To Hide Them

In the face of this invasion of Japanese pedophiles the Mastodon developers created a GitHub issue and started to discuss the child porn problem. Their conversations focused on the legal consequences of having child porn on their local server instances, but the Japanese seemed totally unconcerned by these consequences and argued that some kinds of child porn were legal in Japan. The Japanese refused to stop federating their content, or ban anyone from their instances, but they did agree to put a ‘mature image’ label on their content so children couldn't see it and proposed an age-verification system for Mastodon.

The Mastodon developers immediately implemented new features that made it easy to filter out all of the child pornography, and they also crippled search. These combined efforts helped Mastodon hide the huge pedophile population on their social media platform. Because there is no central Mastodon authority nobody can stop the pedophiles from using Mastodon, and because there are so many pedophiles on Mastodon, the developers decided that it was best to just go ahead and hide them. They had to hide them if they wanted their platform to grow.

Which is how you accidentally moved to the pedoverse without knowing it.

Mastodon instances are populated by social media users whose sexual tastes are too extreme or illegal for mainstream social media platforms, and over time these social media users have found a safe harbour on Mastodon. This is the reason why I am not moving to Mastodon and why influencers really shouldn’t be encouraging Twitter users to go there just so they can have an audience to like their posts.

The largest community of pedophiles on the internet calls Mastodon its home and considers Mastodon a safe space for child porn. It doesn't matter that you can't see them; as a Mastodon user you are sharing a social media platform with them.