The Ethics of China's AI-Powered Surveillance State

Discover the intricate dynamics of China's AI-driven surveillance state, exploring the convergence of facial recognition technology with government oversight and its profound implications for global human rights and individual privacy.

The Ethics of China's AI-Powered Surveillance State: The use of AI and facial recognition technology by the Chinese government to monitor and control its citizens, and the potential implications for human rights and privacy

How much does your government spy on you? How much of your private data does it hold? In our digital lives, where technology and privacy intersect, privacy invasion has long been a topic of intrigue, and at the centre of it all is the government that has faced perhaps the most scrutiny: China.

While the US government investigates China's involvement in unethical surveillance practices, such as the spy balloon and the extent of its access to TikTok users' data, there are other disturbing issues, especially its questionable use of AI surveillance technology within China itself. Its use of facial recognition technology to monitor and control citizens has drawn international attention.

China's surveillance program, known as "Sharp Eyes", operates on an unprecedented scale: by merging AI with facial recognition, it brings a new level of security surveillance to public spaces. But while the Chinese government plays "Keeping Up with the Chinese", read on as we explain the surveillance situation.

The Use of AI for Surveillance in China

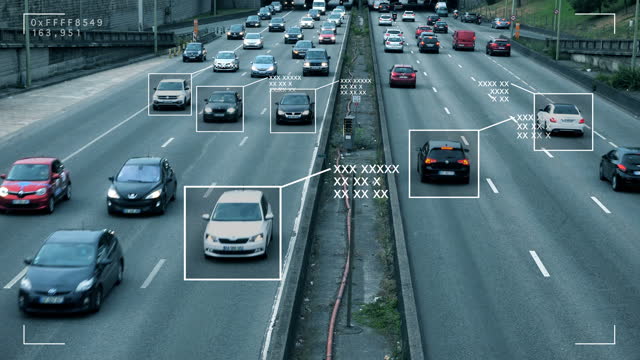

Since 2003, every major security project launched by the Chinese government has involved some level of physical surveillance, from the Golden Shield Project to Safe Cities, SkyNet and Smart Cities. Together, these projects have led to the installation of over 200 million security cameras across China.

The Xueliang Project drew wide attention after its success in Pingyi County, in southern Shandong Province. In just three years, the government installed a total of 28,500 security cameras throughout the county and recorded an improvement in general security. This success motivated the Chinese government to announce a five-year plan, under the title “Sharp Eyes”, to achieve 100% coverage. The Sharp Eyes program involves installing millions of cameras in public spaces that are monitored with AI and facial recognition technology.

Implications for Human Rights and Privacy

The Chinese government's use of facial recognition technology extends beyond its use in surveillance. This technology has been integrated into various spheres, including tracking school attendance, monitoring employee performance in workplaces, and validating the identities of mobile phone users.

In a significant development, the Chinese government introduced a privacy protection standard in 2018. The standard sets out how data should be collected and stored, with explicit guidelines on user consent. However, concerns remain, as existing privacy laws do little to limit the government's own use of private data and facial recognition technology.

The standard also imposes obligations that are in some respects more demanding than those of the European Union's General Data Protection Regulation (GDPR), yet it grants Chinese authorities significantly broader powers.

In an interview with NPR’s Rob Schmitz, an executive from a pioneering facial recognition company claimed that cameras are positioned 2.8 metres above the ground to avoid capturing human faces, in line with established regulations. However, the deployment of AI surveillance in China has raised serious concerns, particularly over its role in the oppression of Uyghurs in Xinjiang, where it is used to profile individuals based on their appearance and closely monitor their movements.

These revelations underscore the ongoing challenge of reconciling technological advances with the protection of human rights and privacy, and they shed light on the darker use of technology in discriminatory practices.

International Response to China's Use of AI for Surveillance

Amid these developments, one response has resounded with particular intensity: that of the United States.

In January 2020, US Defence Secretary Mark Esper and Republican and Democratic members of Congress voiced concern about China's expanding surveillance capabilities. This anxiety inspired the "End Support of Digital Authoritarianism Act," which sought to limit some companies' participation in the Face Recognition Vendor Test. Meanwhile, the US Commerce Department placed Chinese firms and government organisations on a blacklist over their involvement in human rights violations in Xinjiang.

However, some observers see these US manoeuvres as driven by competition in artificial intelligence and by a motive to hinder China's ambition to lead the AI industry by 2030.

On the European front, the EU has taken a more cautious approach, refraining from outright condemnation of China's use of AI surveillance in Xinjiang. Germany has gone back and forth, with government officials struggling to strike a balance between crime control and data privacy. As a result, plans to introduce facial recognition cameras at transport hubs have been put on hold, despite a successful trial at Berlin's Südkreuz station.

France, on the other hand, has taken a proactive stance, unfazed by criticism and GDPR-related concerns. In 2019, the Ministry of the Interior signalled its eagerness to integrate facial recognition technology into Alicem, the national digital identification program. Rather than relying on the general data protection rules alone, the French government is working to establish a dedicated legal framework for facial recognition in security and surveillance.

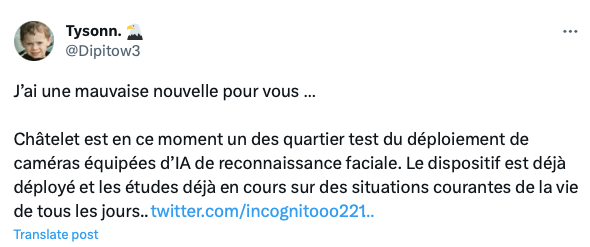

The recent riots in France have fuelled rumours about the ongoing use of AI surveillance cameras in Châtelet, an area directly affected by the unrest.

Translated, the tweet reads:

"I've got some bad news for you ...

Châtelet is currently one of the test areas for deploying cameras equipped with facial recognition AI. The system has already been deployed, and studies are already underway on everyday situations."

Future Developments of China's Use of AI for Surveillance

The trajectory of AI surveillance in China has thrust the nation into the international spotlight, prompting intense scrutiny of its implications for human rights and privacy. Despite this global attention, the path ahead promises even more ambitious developments in China's use of AI for surveillance, pointing to a future that is both intriguing and complex.

The Chinese government is well aware of the unspoken race to harness the transformative potential of AI. As international ambitions soar, China aims to assert its dominance in the field, targeting a core AI industry worth a staggering $147.7 billion by 2030. At the heart of this pursuit lies China's 2017 New Generation Artificial Intelligence Development Plan, a testament to the nation's commitment to putting artificial intelligence at the forefront of global competition. As the plan declares, "Artificial intelligence has become a new focus of international competition. Artificial intelligence is a strategic technology that will lead the future."

Beyond the grand narrative of global leadership, China's vision includes smart cities that weave AI surveillance into many aspects of urban life. It extends further still, casting AI as a key enabler of the nation's military capabilities, with the potential to reshape national security and defence.

China's plan is now in its second phase, which runs until 2025. The first phase, completed in 2020, laid the groundwork by formulating essential policies, ethical considerations, and new AI theories and technologies. As the nation approaches the final phase, spanning 2025 to 2030, the world watches closely as China steers toward realising its intricate blueprint.

Conclusion

As we have seen, China's multidimensional path has consequences that go well beyond technological development.

From Europe's measured approach to France's proactive stance, nations are grappling with the delicate balance between technological innovation and safeguarding individual rights. Related concerns such as social credit scoring and predictive policing add further layers of complexity, highlighting the ethical and societal questions that accompany the rise of AI surveillance. Together, these issues are shaping the contours of human rights, privacy, and modern society itself.

China's AI surveillance story is just one example of the conflicts that will keep arising as the world advances technologically. It is a reminder to reflect, adapt, and chart a course that upholds our shared ideals in this evolving era.