Web Scraping And Why You Shouldn’t Publish Your Email Address

Learn about web scraping, why you should never publish your email address on the internet, and what happens once your email has been scraped, with Alessandro Innocenzi.

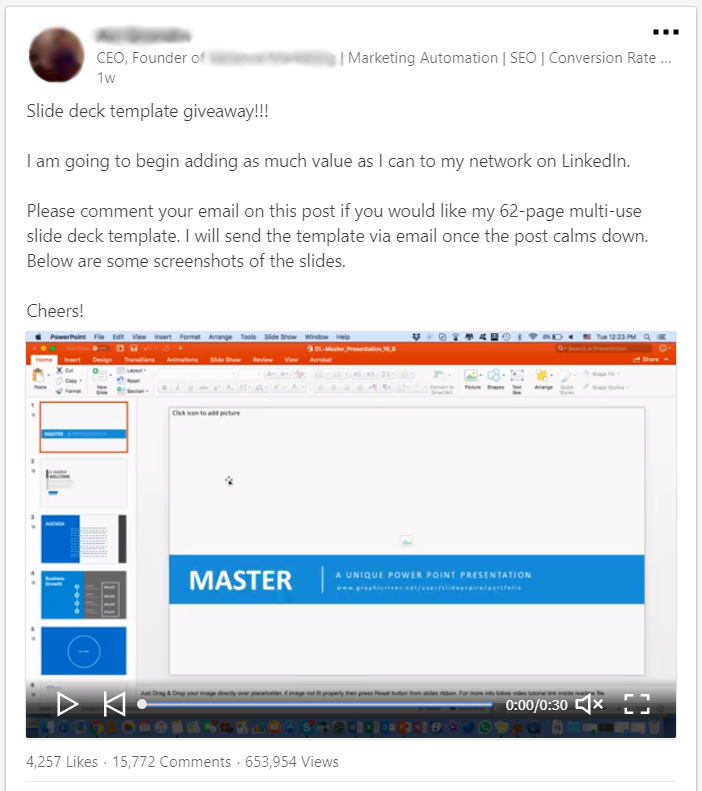

A few days ago I saw a gentleman on LinkedIn ask his audience to do something you should absolutely never do on a public social network: post their email address in return for something.

At the time of writing, his original post has about 16,000 comments, nearly every one containing an email address. I want to point out that I'm not directly connected with this person, yet I can see the post and all of its comments, email addresses included, so anyone with a LinkedIn account can do the same.

Don’t Be Tempted

You want this beautiful PowerPoint template, but you have to think about the consequences of publishing your email address on a public social network (or on your personal website), because there's something called OSINT (open-source intelligence).

I could search for your email address on a search engine, or use a dedicated tool like Maltego, to build a map of you, your friends, your interests, your pets and so on, using only the personal data you publish every day, everywhere.

For example, on LinkedIn alone I could see your job, your interests, your connections, your industry and, in most cases, your hobbies too. That is a lot of information to attack you with.

Attack? What kind of attacks could you receive? Many, from social engineering attacks to plain spam.

For example, I can tell with high confidence which address is personal and which isn't. An address on a free webmail provider is most probably personal, while an address on a company domain is most probably a professional account. Then I could craft a targeted phishing email, or create a malicious app or portal about your interests and send you an email or a LinkedIn message about it (after connecting with some of your contacts, to make you believe I'm a trusted person), with a high chance that you will use or try it.
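The personal-versus-professional guess described above can be approximated with a simple domain check. The sketch below is only a heuristic under stated assumptions: `FREE_WEBMAIL_DOMAINS` is a small, hypothetical sample list, and any domain not on it is assumed to belong to a company.

```python
# Heuristic sketch: guess whether an address is personal or professional
# from its domain. FREE_WEBMAIL_DOMAINS is a small, hypothetical sample
# list, not an authoritative catalogue of webmail providers.
FREE_WEBMAIL_DOMAINS = {
    "gmail.com", "yahoo.com", "outlook.com", "hotmail.com",
    "icloud.com", "aol.com", "protonmail.com",
}


def classify_address(address: str) -> str:
    """Return 'personal', 'professional' or 'invalid' for an address."""
    # rpartition splits on the LAST '@', leaving the domain on the right.
    local, sep, domain = address.strip().rpartition("@")
    if not sep or not local or not domain:
        return "invalid"
    if domain.lower() in FREE_WEBMAIL_DOMAINS:
        return "personal"
    # Assumption: anything not on the webmail list is a company domain.
    return "professional"


if __name__ == "__main__":
    for addr in ["jane.doe@gmail.com", "jane.doe@acme-corp.example"]:
        print(addr, "->", classify_address(addr))
```

A real attacker would use a much longer provider list, but even this crude version shows how cheaply scraped addresses can be sorted into targets.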

Now you know the risks. If you still aren't convinced, imagine someone saving all 16,000 email addresses from those comments…

Scrapers, Grabbers, Extractors, Or Whatever You Want To Call Them

Obviously, there are tons of services, tools and browser extensions that make it easy to extract data from web pages.

In our case, anyone who wants to extract email addresses can simply use Grabby or similar tools, and there are tools like LinkedIn Lead Extractor that save all the personal data.

Some websites, LinkedIn for example, prohibit the use of scrapers, but these tools are legal, since their main purpose is to build lists of potential customers for sales and business units. As I said, though, anyone could use them for other purposes, especially because these tools are so easy to use that even non-technical people can steal information and sell it to spammers or, even worse, to hackers.

Hack Your Way With CSS Selectors

If you're a tech freak and want to build your own tool, there are plenty of options. For example, you can use Scrapy, a Python web crawling framework for extracting data from websites. The problem with this approach is that it extracts data from HTML elements using CSS selectors (or XPath), so you need to know the HTML structure of the target website. In addition, several websites change their structure frequently to prevent, or complicate, the work of scrapers: adding a new DIV element or renaming a CSS class can force you to edit your script.
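To show why selector-based scraping is fragile without pulling in Scrapy itself, here is a dependency-free stand-in built on Python's standard `html.parser`. It mimics a simple `tag.class` CSS selector such as `span.email`; the tag and class names are hypothetical, and this is not how Scrapy implements selectors.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collect the text of every <tag class="..."> element, roughly what the
    CSS selector 'tag.class_name' matches (no support for nested matches)."""

    def __init__(self, tag: str, class_name: str) -> None:
        super().__init__()
        self.tag = tag
        self.class_name = class_name
        self._inside = False
        self.results = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.class_name in classes:
            self._inside = True
            self.results.append("")

    def handle_endtag(self, tag):
        if tag == self.tag and self._inside:
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.results[-1] += data


def select_text(html: str, tag: str, class_name: str) -> list:
    """Return the stripped text of all elements matching tag.class_name."""
    parser = ClassTextExtractor(tag, class_name)
    parser.feed(html)
    parser.close()
    return [text.strip() for text in parser.results]


if __name__ == "__main__":
    page_v1 = '<ul><li><span class="email">alice@example.com</span></li></ul>'
    # Same page after the site renames the CSS class.
    page_v2 = '<ul><li><span class="contact-mail">alice@example.com</span></li></ul>'
    print(select_text(page_v1, "span", "email"))
    print(select_text(page_v2, "span", "email"))
```

The first call finds the address; the second returns an empty list, even though the address is still on the page, because the scraper depended on a class name the site controls.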

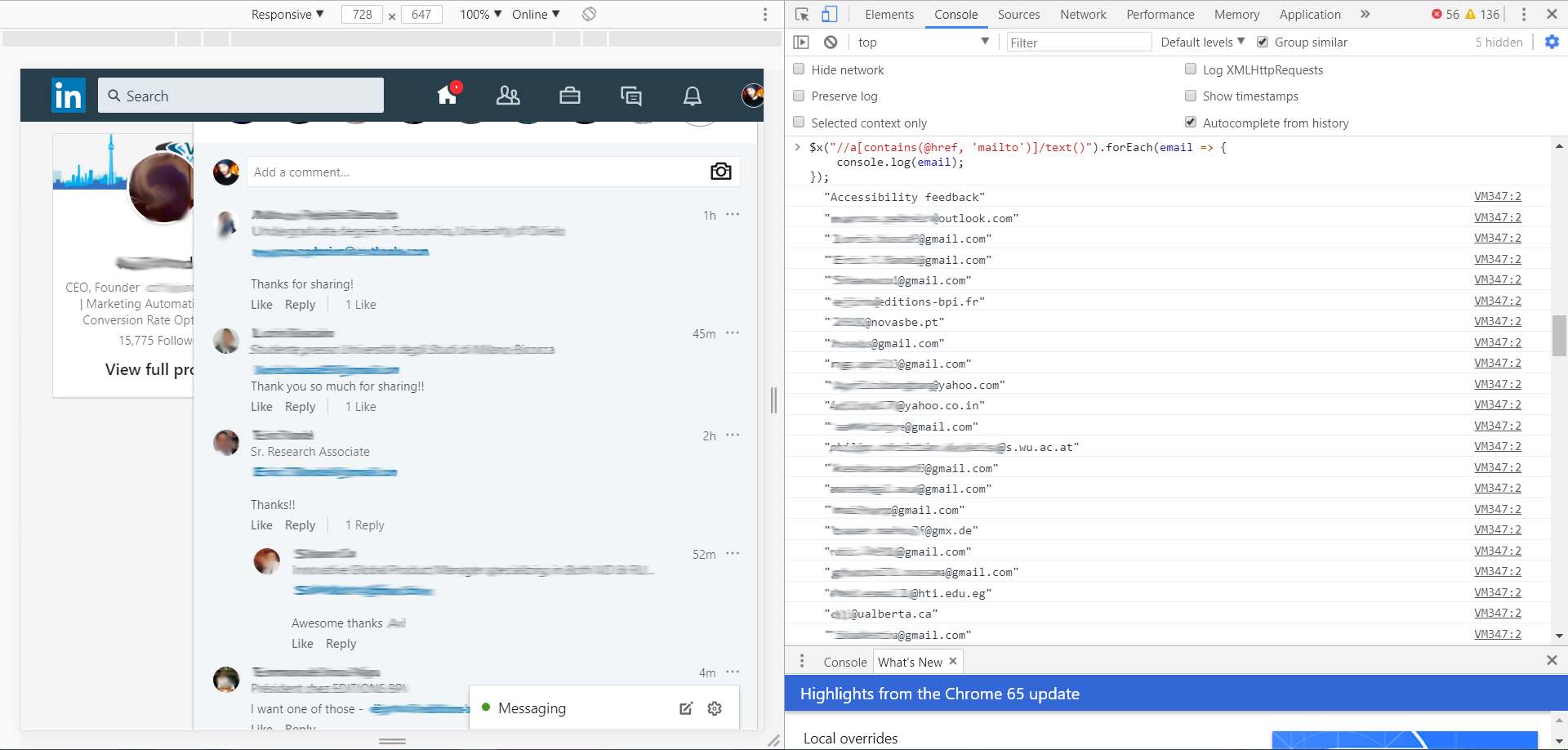

To experiment with CSS selectors in real time, run this simple code in the Chrome console:

It produces a list of email addresses, as in the following image:

Too easy :(

You can adapt this line of code to your needs!

More Generally

But we don't want to study the HTML structure, or edit our script every time the target website changes. So let's try another approach. Generally speaking, we simply have to load the HTML, search for strings that match specific rules (regular expressions work well here) and save them wherever we want (files, SQLite, MongoDB, punched cards, etc.).
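The load, search and save approach described above fits in a few lines of standard-library Python. This is a minimal sketch under assumptions: the email regex is deliberately simple (real address syntax per RFC 5322 is far more permissive), and the `emails` table and its columns are hypothetical. `load_html` shows how a page could be fetched with `urllib.request`, but the demo uses an inline snippet so it runs offline.

```python
import re
import sqlite3
import urllib.request

# Deliberately simple pattern: it will miss some valid (but unusual) addresses.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def load_html(url: str) -> str:
    """Download a page and decode it as UTF-8, replacing undecodable bytes."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def find_emails(text: str) -> list:
    """Return unique matches in order of first appearance."""
    return list(dict.fromkeys(EMAIL_RE.findall(text)))


def save_emails(conn: sqlite3.Connection, emails: list, source: str) -> None:
    """Store addresses in a hypothetical 'emails' table, skipping duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS emails (address TEXT PRIMARY KEY, source TEXT)"
    )
    conn.executemany(
        "INSERT OR IGNORE INTO emails (address, source) VALUES (?, ?)",
        [(email, source) for email in emails],
    )
    conn.commit()


if __name__ == "__main__":
    # Offline demo: an inline HTML snippet instead of load_html(...).
    html = "<p>Write to alice@example.com or bob.smith@example.org</p>"
    conn = sqlite3.connect(":memory:")
    save_emails(conn, find_emails(html), source="inline-demo")
    print(conn.execute("SELECT address FROM emails ORDER BY address").fetchall())
```

Nothing here depends on the page layout: whether the address sits in a `span`, a comment or a footer, the regex finds it, which is exactly why publishing an address anywhere on a page is enough for it to be harvested.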

Just to get started, try this in a Linux terminal (and modify it to play with the regex):
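As a Python alternative to a terminal one-liner, this small filter reads text from standard input and prints each unique match, so it can sit at the end of a pipeline such as `curl -s https://example.com | python3 find_emails.py` (the script name is hypothetical). To play with regex, pass your own pattern as the first argument.

```python
import re
import sys

# Default pattern; pass a different one as the first argument to experiment.
DEFAULT_PATTERN = r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"


def unique_matches(pattern: str, text: str) -> list:
    """Return unique full matches of pattern in text, in order of appearance."""
    regex = re.compile(pattern)
    # finditer + group(0) yields the whole match even if the pattern has
    # capturing groups (findall would return only the groups in that case).
    return list(dict.fromkeys(m.group(0) for m in regex.finditer(text)))


def main(args: list) -> None:
    pattern = args[0] if args else DEFAULT_PATTERN
    for match in unique_matches(pattern, sys.stdin.read()):
        print(match)


if __name__ == "__main__":
    main(sys.argv[1:])
```

For example, `python3 find_emails.py '[\w.]+@gmail\.com'` would keep only Gmail addresses from whatever is piped in.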

You can find some examples I created for this article on GitHub; I hope they help you create your own tool! Feel free to share your ideas!